TensorFlow Dev Summit is an annual conference held by Google that covers a range of topics around the TensorFlow framework. I usually watch it via the live stream on YouTube, but this time I was fortunate enough to attend in person. There were a number of exciting announcements at the conference, so I list the interesting ones in this article.

Table Of Contents

- TensorFlow 2.0

- TensorFlow Lite

- TensorFlow.js 1.0

- Others

TensorFlow 2.0

TensorFlow 2.0 alpha was released today. The biggest changes in this version are the simplified API built around Keras and eager execution by default. In the past, it was a little hard to write complicated control flow as a TensorFlow graph. You needed to be familiar with ops such as tf.cond, tf.while_loop, or tf.where (formerly tf.select) in order to write conditions. That made it difficult for programmers to write code that does what they intend.

From 2.0, you can use tf.function to write complex control flow as a TensorFlow graph. tf.function is just a decorator attached to a Python function. TensorFlow automatically resolves the dependencies on the tensors and creates a graph.

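    import tensorflow as tf

    @tf.function
    def f(x):
        # A plain Python while loop; it is converted into a graph loop
        # because its condition depends on a tensor.
        while tf.reduce_sum(x) > 1:
            x = tf.tanh(x)
        return x

    f(tf.random.uniform([10]))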

In the above example, function f becomes a TensorFlow graph because it depends on the given tensor x, even though we don’t define any ops for the while control flow. That makes development far easier because we can write the control flow of a TensorFlow graph just as we write Python code. Under the hood, this is achieved by AutoGraph, which rewrites Python statements such as if and while into their graph equivalents.
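
The same idea applies to conditionals. The following is a minimal sketch, assuming TensorFlow 2.x is installed; the function names normalize_or_double and explicit_version are made up for illustration. It compares a plain Python if inside tf.function with the explicit tf.cond version that had to be written by hand before.

```python
import tensorflow as tf

@tf.function
def normalize_or_double(x):
    # The condition is a tensor, so this Python `if` is converted
    # into a graph conditional when the function is traced.
    if tf.reduce_sum(x) > 1.0:
        y = x / tf.reduce_sum(x)
    else:
        y = x * 2.0
    return y

def explicit_version(x):
    # The hand-written equivalent using tf.cond.
    total = tf.reduce_sum(x)
    return tf.cond(total > 1.0, lambda: x / total, lambda: x * 2.0)

big = tf.constant([1.0, 3.0])    # sum is 4.0, so the first branch runs
small = tf.constant([0.1, 0.2])  # sum is 0.3, so the second branch runs

print(normalize_or_double(big).numpy(), explicit_version(big).numpy())
print(normalize_or_double(small).numpy(), explicit_version(small).numpy())
```

Both versions should return the same values; the first one simply reads like ordinary Python.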

It also removes the need for tf.control_dependencies, which was required to update multiple variables in the correct order. This reduces the complexity of graph construction too.
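
As a small sketch of what this means in practice (assuming TensorFlow 2.x; the names counter and add_then_double are hypothetical), two variable updates inside a tf.function run in the order they are written, without any tf.control_dependencies block:

```python
import tensorflow as tf

counter = tf.Variable(1.0)

@tf.function
def add_then_double():
    # Stateful ops run in program order inside tf.function,
    # so no explicit control dependencies are needed here.
    counter.assign_add(1.0)        # first update: 1 + 1
    counter.assign(counter * 2.0)  # second update reads the result of the first
    return counter.read_value()

result = add_then_double()
print(float(result))  # 4.0, i.e. (1 + 1) * 2
```

In TensorFlow 1.x graph mode, the second assignment would have needed to be wrapped in tf.control_dependencies to guarantee that it ran after the first.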

So overall you do not need to write the following things anymore.

- tf.Session.run

- tf.control_dependencies

- tf.global_variables_initializer

- tf.cond, tf.while_loop

Please see here for more details about TensorFlow 2.0 and the AutoGraph feature.

Improvements of TensorFlow Lite

One amazing thing I found at the conference was that the TensorFlow project is putting a significant amount of resources into edge computing, which happens on mobile devices, wearables, browsers, and smart speakers. TensorFlow Lite is the flagship project for accelerating ML on edge devices. ML on edge devices is thought to be needed for the following reasons.

- Fast Interactive Application

- Data Privacy

By running the ML application on the client side, we can eliminate the overhead of sending data between server and client. This is especially beneficial in environments with limited network resources or bandwidth. It also protects data privacy by avoiding sending data to the server. So the demand for ML on edge devices keeps growing.

TensorFlow Lite is a project for creating lightweight TensorFlow models that run on edge devices. By delegating processing to the Edge TPU and combining it with quantization, they achieve up to 62x faster inference.
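
To give a rough feel for what quantization does, here is a sketch in plain Python of affine 8-bit quantization: floats are mapped to small integers through a scale and a zero point, and mapped back with a small rounding error. This is only an illustration of the general idea under my own assumptions, not TensorFlow Lite's actual implementation; the helper names quantize and dequantize are made up.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers using a scale and a zero point."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Make sure the represented range always contains 0.0.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - lo / scale)
    quantized = [
        min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point

def dequantize(quantized, scale, zero_point):
    """Map the integers back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]

weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)

print(q)
print(restored)
```

Each weight now fits in one byte instead of four, and integer arithmetic is what accelerators such as the Edge TPU are built for, at the cost of a reconstruction error of at most about one quantization step.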

They also have a super tiny model of only tens of kilobytes, small enough to fit on a microcontroller. The SparkFun Edge is a demo board powered by TensorFlow. The exciting thing is that we got the board as a gift for attending the conference.

This kind of gift is always fun for me because we can try out what we’ve learned. So I’m going to try running the tiny model on the microcontroller later. In my opinion, the evolution of ML on edge devices is the most interesting field. Please take a look at the video for more details about TensorFlow Lite.

TensorFlow.js 1.0

This is the reason why I’m involved with the TensorFlow project. I have been contributing to TensorFlow.js since it was first published (it was initially named deeplearn.js). It gave me the opportunity to write a book, “Deep Learning in the Browser”. Seeing a project I’m involved in evolve gives me great joy.

Now it has reached a milestone with the 1.0 release. In addition to the core components, there are multiple supporting libraries around TensorFlow.js.

Those libraries give us an experience similar to using the TensorFlow core libraries. This should accelerate application development on the client side, wherever a JavaScript runtime is supported. In fact, the TensorFlow.js project will support various platforms such as Electron and React Native, so that we can use the same application code on many platforms.

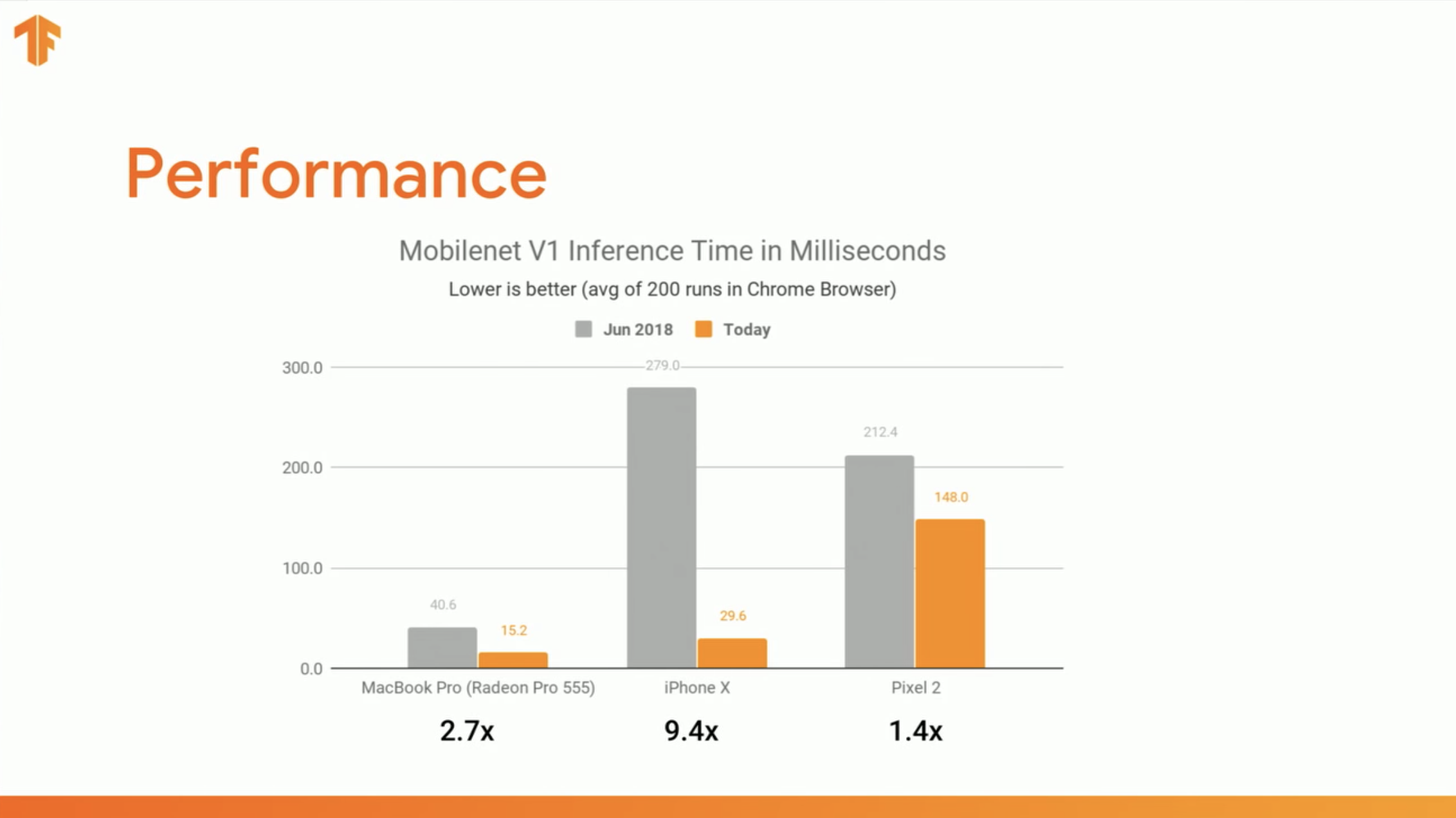

Here is another interesting slide. The performance of TensorFlow.js in the Chrome browser has been improving since its initial release. TensorFlow.js can therefore be considered a production-ready platform that sufficiently supports client-side ML applications.

I also had a chance to talk with TensorFlow.js team members about the enhancements and development plans for the near future. I’m looking forward to these changes, and I also want to contribute to them to make TensorFlow.js more powerful. Here is the video about the announcement of TensorFlow.js 1.0.

Others

Last but not least, I’m going to introduce some other changes I found at the conference.

- tf-agents was released to accelerate reinforcement learning with TensorFlow

- TensorBoard can be embedded in Google Colab and Jupyter notebooks

- The new conference TensorFlow World will be held Oct 28-31

- Two new online courses for learning TensorFlow are available on Coursera and Udacity

The full videos of the presentations given at the conference are available on YouTube. Please take a look if you want to know more.

Thanks!